Trandoshan is designed to run on distributed systems and is available as Docker images, which makes it a great candidate for the cloud. In fact, there is a repository which holds all the configuration files needed to deploy a production instance of Trandoshan on a Kubernetes cluster. The files are available here, and the container images are available on Docker Hub. If you have kubectl configured correctly, you can deploy Trandoshan in a single command:

Deep web iceberg diagram code#

Before talking about the responsibility of each process, it is important to understand how they talk to each other. The inter-process communication (IPC) is mainly done using a messaging protocol known as NATS (yellow line in the diagram), based on the producer / consumer pattern. Each message in NATS has a subject (like an email) that allows other processes to identify it and therefore to read only the messages they want to read. NATS allows scaling: for example, there can be 10 crawler processes reading URLs from the messaging server. Each of these processes will receive a unique URL to crawl. This allows process concurrency (many instances can run at the same time without conflicting with one another) and therefore increases performance.

Trandoshan is divided into 4 principal processes:

- Crawler: the process responsible for crawling pages. It reads URLs to crawl from NATS (messages identified by subject "todoUrls"), crawls the page, and extracts all URLs present in the page. These extracted URLs are sent to NATS with subject "crawledUrls", and the page body (the whole content) is sent to NATS with subject "content".
- Scheduler: the process responsible for URL approval. It reads the "crawledUrls" messages, checks whether the URL is to be crawled (that is, whether it has not already been crawled) and, if so, sends the URL to NATS with subject "todoUrls".
- Persister: the process responsible for content archiving. It reads page content (messages identified by subject "content") and stores it in a NoSQL database (MongoDB).
- API: the process used by other processes to gather information. For example, it is used by the Scheduler to determine whether a page has already been crawled. Instead of directly calling the database to check if a URL exists (which would add extra coupling to the database technology), the Scheduler uses the API: this provides a layer of abstraction between the database and the processes.

The different processes are written in Go, because it offers a lot of performance (since it's compiled to a native binary) and has a lot of library support. Go is well suited to building high-performance distributed systems. The source code of Trandoshan is available on GitHub here.
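To make the Scheduler's role more concrete, here is a minimal sketch of the approval step. It is not the actual Trandoshan code: Go channels stand in for the NATS subjects "crawledUrls" and "todoUrls", and the `CrawlChecker` interface, the `memoryAPI` type and the `runScheduler` helper are hypothetical names invented for this illustration.

```go
package main

import "fmt"

// CrawlChecker is a hypothetical stand-in for the API process: the
// scheduler only asks whether a URL has already been crawled and never
// touches the database itself.
type CrawlChecker interface {
	AlreadyCrawled(url string) bool
}

// memoryAPI is an in-memory implementation, for illustration only.
type memoryAPI map[string]bool

func (m memoryAPI) AlreadyCrawled(url string) bool { return m[url] }

// schedule reads URLs from crawledUrls and forwards the ones that have
// not been crawled yet to todoUrls. The two channels play the role of
// the NATS subjects with the same names.
func schedule(api CrawlChecker, crawledUrls <-chan string, todoUrls chan<- string) {
	for u := range crawledUrls {
		if !api.AlreadyCrawled(u) {
			todoUrls <- u
		}
	}
	close(todoUrls)
}

// runScheduler wires the channels together and returns the approved URLs
// in the order they were found.
func runScheduler(api CrawlChecker, found []string) []string {
	crawledUrls := make(chan string)
	todoUrls := make(chan string)

	// Simulate a crawler publishing the URLs it extracted from a page.
	go func() {
		for _, u := range found {
			crawledUrls <- u
		}
		close(crawledUrls)
	}()
	go schedule(api, crawledUrls, todoUrls)

	var approved []string
	for u := range todoUrls {
		approved = append(approved, u)
	}
	return approved
}

func main() {
	api := memoryAPI{"http://example.onion/": true}
	found := []string{"http://example.onion/", "http://example.onion/about"}
	fmt.Println(runScheduler(api, found))
}
```

Because `schedule` depends only on the `CrawlChecker` interface, the storage behind it could be swapped without changing the scheduler, which is the kind of decoupling described above. Note that this sketch never marks a URL as crawled, so a URL found twice before being crawled would be forwarded twice.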

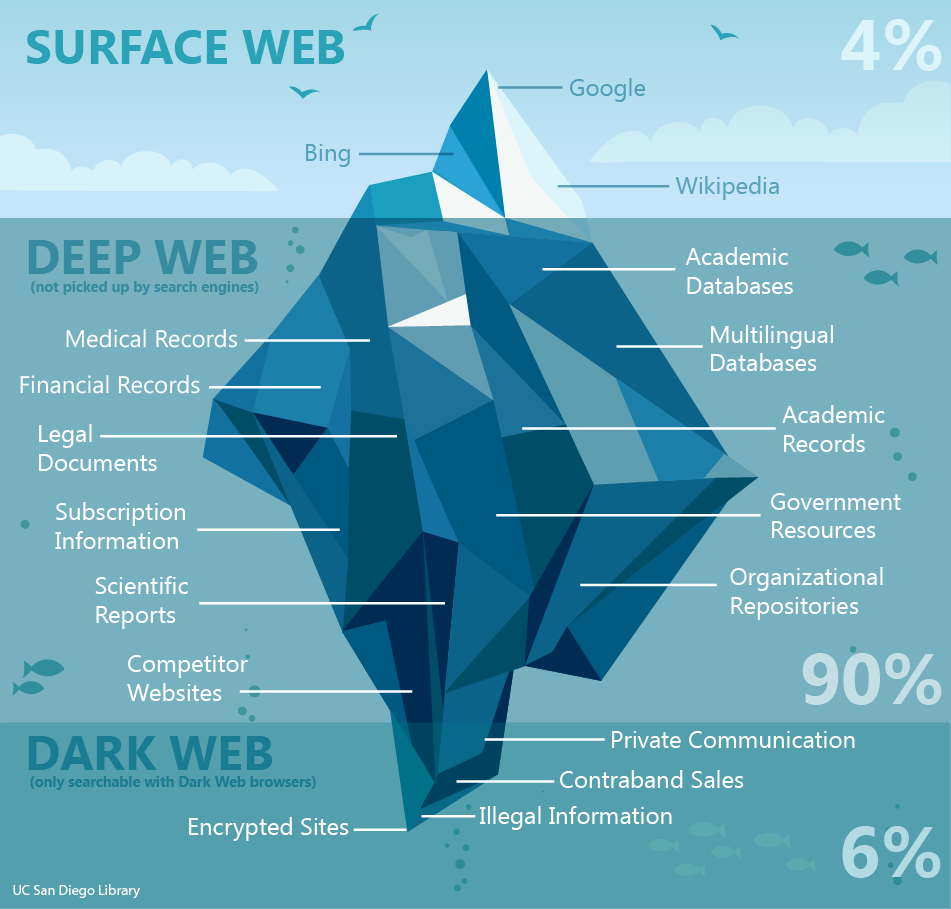

The web is composed of 3 layers and we can think of it like an iceberg:

- The Surface Web, or Clear Web, is the part that we browse every day. It's indexed by popular web crawlers such as Google, Qwant, DuckDuckGo, etc.
- The Deep Web is the part of the web that is not indexed. This means that you cannot find these websites using a search engine; you need to know the associated URL / IP address to access them.
- The Dark Web is a part of the web that you cannot access using a regular browser. You'll need to use a particular application or a special proxy. The most famous dark web is the hidden services built on the Tor network. They can be accessed using special URLs that end with .onion.
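A crawler that targets Tor hidden services needs a way to tell their addresses apart from regular ones. The following is a minimal sketch of such a check using only Go's standard library; the function name `isOnionURL` is made up for this example and is not taken from the Trandoshan source.

```go
package main

import (
	"fmt"
	"net/url"
	"strings"
)

// isOnionURL reports whether rawURL parses as a URL whose host name ends
// with ".onion". URLs without a scheme (for example "example.onion") have
// no host component after parsing and are therefore rejected.
func isOnionURL(rawURL string) bool {
	u, err := url.Parse(rawURL)
	if err != nil {
		return false
	}
	// Hostname strips any port; compare case-insensitively.
	host := strings.ToLower(u.Hostname())
	return strings.HasSuffix(host, ".onion")
}

func main() {
	for _, candidate := range []string{
		"http://example.onion/page",
		"https://example.com/.onion",
	} {
		fmt.Println(candidate, isOnionURL(candidate))
	}
}
```

Checking the parsed host rather than the raw string avoids false positives such as a clear-web URL whose path happens to end with ".onion".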

I have been passionate about web crawlers for a long time. I have written several of them in many languages such as C++, JavaScript (Node.js) and Python, and I love the theory behind them.

But first of all, what is a web crawler?

A web crawler is a computer program that browses the internet to index existing pages, images, PDFs, etc. and allows users to search them using a search engine. It's basically the technology behind the famous Google search engine.

Typically, an efficient web crawler is designed to be distributed: instead of a single program that runs on a dedicated server, it consists of multiple instances of several programs that run on several servers (e.g. in the cloud), which allows better task distribution, increased performance and increased bandwidth.

But distributed software does not come without drawbacks: there are factors that may add extra latency to your program and decrease performance, such as network latency, synchronization problems, poorly designed communication protocols, etc. To be efficient, a distributed web crawler has to be well designed, and it is important to eliminate as many bottlenecks as possible. As French admiral Olivier Lajous has said: "The weakest link determines the strength of the whole chain."

You may know that there are several successful web crawlers running on the web, such as Googlebot. So I didn't want to make yet another one. What I wanted to do this time was to build a web crawler for the dark web.

I won't get too technical in describing what the dark web is, since it may need its own article.
